Introduction:

Generative Adversarial Networks (GANs) have transformed generative modeling, particularly the synthesis of realistic, high-quality images. A key advance in GANs is the style-based generator, introduced with StyleGAN. It gives finer-grained control over the generated images by injecting a learned "style" into each layer of the generator. In this article, we explore the architecture of a style-based generator: its components, what each one does, and the principles behind how it works.

I. Understanding Generative Adversarial Networks (GANs)
A. Overview of GANs: Generative Models and Discriminative Models
B. Training Process: Adversarial Learning and Optimization
C. Applications of GANs: Image Generation, Style Transfer, and More
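For reference, the adversarial training process is usually written as the two-player minimax objective from the original GAN formulation. The discriminator D tries to maximize it and the generator G tries to minimize it:

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

Here p_z is the prior over latent codes, typically a standard Gaussian. In practice, the generator is usually trained to maximize log D(G(z)) instead, which gives stronger gradients early in training.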

II. Evolution of Style-Based Generators
A. Traditional GAN Architectures: DCGAN, WGAN, etc.
B. Introduction of Style-Based Generators: Improving Image Quality and Diversity
C. Motivation Behind Style-Based Approaches: Fine-Grained Control and Manipulation

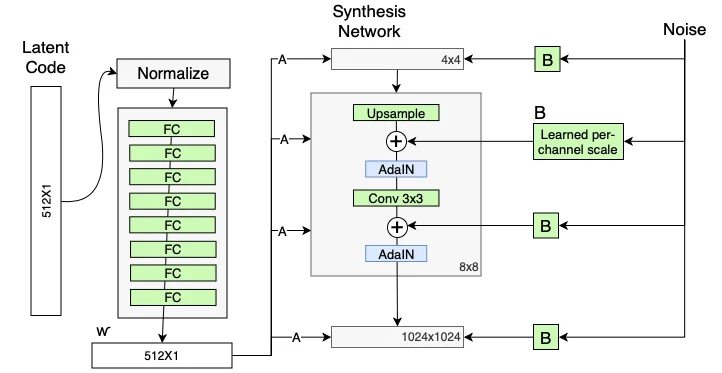

III. Architecture of a Style-Based Generator
A. Input Latent Space: Z-Space and W-Space Representation
B. Mapping Network: Mapping Latent Space to Intermediate Latent Space (W)
C. Synthesis Network: Generating Images from Intermediate Latent Space
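To make the mapping step concrete, here is a minimal pure-Python sketch of a StyleGAN-style mapping network. The input code z is normalized and then passed through a stack of fully connected layers with leaky ReLU activations, producing the intermediate code w. StyleGAN uses 8 layers with 512-dimensional z and w. The 0.2 leaky-ReLU slope, the random Gaussian initialization, and the helper names are assumptions of this sketch. It performs no training and uses small vectors for readability.

```python
import math
import random

def pixel_norm(z, eps=1e-8):
    """Scale z so its mean squared component is ~1 (normalization applied to z)."""
    mean_sq = sum(v * v for v in z) / len(z)
    scale = 1.0 / math.sqrt(mean_sq + eps)
    return [v * scale for v in z]

def leaky_relu(v, slope=0.2):
    """Leaky ReLU; the 0.2 slope is an assumption of this sketch."""
    return v if v >= 0 else slope * v

def dense(x, weights, biases):
    """Fully connected layer: one weight row per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, biases)]

def make_mapping_network(dim, num_layers=8, seed=0):
    """Hypothetical helper: Gaussian weights scaled by 1/sqrt(dim), zero biases."""
    rng = random.Random(seed)
    layers = []
    for _ in range(num_layers):
        weights = [[rng.gauss(0.0, 1.0) / math.sqrt(dim) for _ in range(dim)]
                   for _ in range(dim)]
        layers.append((weights, [0.0] * dim))
    return layers

def mapping_network(z, layers):
    """Map a latent code z (Z-space) to an intermediate code w (W-space)."""
    x = pixel_norm(z)
    for weights, biases in layers:
        x = [leaky_relu(v) for v in dense(x, weights, biases)]
    return x

# Usage: 16-dimensional codes instead of StyleGAN's 512.
rng = random.Random(42)
layers = make_mapping_network(dim=16)
z = [rng.gauss(0.0, 1.0) for _ in range(16)]
w = mapping_network(z, layers)
print(len(w))  # 16
```

The synthesis network then consumes w rather than z. This lets the mapping network "unwarp" the latent space so that W does not have to follow the fixed Gaussian distribution of Z.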

IV. Components of a Style-Based Generator
A. Mapping Network Architecture: Linear Layers, Activation Functions, and Normalization
B. Synthesis Network Architecture: Learned Constant Input, Convolutional Layers, Upsampling, and Per-Pixel Noise Inputs
C. Adaptive Instance Normalization (AdaIN): Style Injection Mechanism
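AdaIN normalizes each feature-map channel to zero mean and unit variance and then applies a per-channel style scale and bias: AdaIN(x_i, y) = y_s,i * (x_i - mean(x_i)) / std(x_i) + y_b,i. In StyleGAN, the style values come from a learned affine transform of w. The minimal sketch below takes them as given. As a simplification for readability, it represents each channel as a flat list of spatial activations.

```python
import math

def adain(features, style_scale, style_bias, eps=1e-8):
    """Adaptive instance normalization.

    features: list of channels, each a flat list of spatial activations.
    style_scale, style_bias: one value per channel (y_s and y_b).
    """
    out = []
    for channel, y_s, y_b in zip(features, style_scale, style_bias):
        mean = sum(channel) / len(channel)
        var = sum((v - mean) ** 2 for v in channel) / len(channel)
        std = math.sqrt(var + eps)
        # Normalize the channel, then impose the style's scale and bias.
        out.append([y_s * (v - mean) / std + y_b for v in channel])
    return out

# Usage: two channels of four activations each.
x = [[1.0, 2.0, 3.0, 4.0], [0.0, 0.0, 1.0, 1.0]]
y = adain(x, style_scale=[2.0, 1.0], style_bias=[5.0, 0.0])
print(round(sum(y[0]) / 4, 6))  # 5.0
```

Because the normalization erases each channel's previous statistics, a style controls a layer only until the next AdaIN overrides it. This is what makes per-layer style control possible.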

V. Style Mixing and Interpolation
A. Mixing Regularization: Keeping Styles Localized by Preventing Adjacent Layers' Styles from Being Correlated
B. Interpolation in Latent Space: Smooth Transition Between Styles
C. Controllable Generation: Manipulating Specific Attributes of Generated Images
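Style mixing and latent interpolation both operate on the per-layer list of w vectors fed to the synthesis network. During training, mixing regularization generates a fraction of images from two latent codes, switching from one to the other at a random crossover layer. The sketch below shows one way to build those per-layer lists and interpolate in W. The 0.9 mixing probability and the names `style_mix`, `random_style_mix`, and `interpolate` are illustrative assumptions, not a library API.

```python
import random

def style_mix(w1, w2, num_layers, crossover):
    """Per-layer styles: w1 for layers before `crossover`, w2 from `crossover` on."""
    return [list(w1) if i < crossover else list(w2) for i in range(num_layers)]

def random_style_mix(w1, w2, num_layers, mixing_prob=0.9, rng=random):
    """Mixing regularization: with probability mixing_prob, switch codes at a random layer."""
    if rng.random() < mixing_prob:
        crossover = rng.randrange(1, num_layers)  # guarantees both codes are used
        return style_mix(w1, w2, num_layers, crossover)
    return style_mix(w1, w2, num_layers, num_layers)  # no mixing: every layer gets w1

def lerp(a, b, t):
    """Linear interpolation between two w vectors."""
    return [x + (y - x) * t for x, y in zip(a, b)]

def interpolate(w1, w2, steps):
    """`steps` evenly spaced codes from w1 to w2, inclusive (requires steps >= 2)."""
    return [lerp(w1, w2, i / (steps - 1)) for i in range(steps)]

# Usage: 18 style inputs, the count StyleGAN uses at 1024x1024.
w_a, w_b = [0.0, 0.0], [1.0, 2.0]
styles = style_mix(w_a, w_b, num_layers=18, crossover=4)
print(styles[3], styles[4])  # [0.0, 0.0] [1.0, 2.0]
print(interpolate(w_a, w_b, 3))  # [[0.0, 0.0], [0.5, 1.0], [1.0, 2.0]]
```

An early crossover lets the second code control most of the image, including coarse attributes such as pose and face shape. A late crossover lets it change only fine details such as the color scheme.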

VI. Training and Optimization
A. Loss Functions: Adversarial Loss, Feature Matching Loss, and Path Length Regularization
B. Training Stability: Techniques to Improve Convergence and Robustness
C. Hyperparameter Tuning: Learning Rate, Batch Size, and Architectural Choices
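As one concrete example of an adversarial loss, the sketch below computes the non-saturating logistic loss, a common choice for StyleGAN-family models, from raw discriminator logits. It assumes the discriminator outputs unbounded logits, which sigmoid maps to probabilities. It omits regularizers such as path length regularization, which would add further terms.

```python
import math

def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def discriminator_loss(real_logits, fake_logits):
    """-log sigmoid(D(x)) - log(1 - sigmoid(D(G(z)))), averaged over each batch.

    Uses -log sigmoid(l) == softplus(-l) and -log(1 - sigmoid(l)) == softplus(l).
    """
    real_term = sum(softplus(-l) for l in real_logits) / len(real_logits)
    fake_term = sum(softplus(l) for l in fake_logits) / len(fake_logits)
    return real_term + fake_term

def generator_loss(fake_logits):
    """Non-saturating loss: minimize -log sigmoid(D(G(z)))."""
    return sum(softplus(-l) for l in fake_logits) / len(fake_logits)

# Usage: a discriminator that is unsure (logit 0) gives log(2) per term.
print(round(generator_loss([0.0]), 4))  # 0.6931
```

Writing both losses through `softplus` avoids overflow for large logits. A plain `math.log(1 + math.exp(x))` would fail for inputs around 710 or more.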

VII. Evaluation and Validation
A. Inception Score (IS) and Fréchet Inception Distance (FID)
B. Human Evaluation: Subjective Assessment of Image Quality and Diversity
C. Domain-Specific Metrics: Task-Specific Evaluation Criteria
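For reference, FID and IS are usually defined as follows. In the FID formula, mu_r, Sigma_r and mu_g, Sigma_g are the mean and covariance of Inception-v3 features for real and generated images. In the IS formula, p(y | x) is the Inception classifier's label distribution for a generated image x, and p(y) is its marginal over generated images.

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2} \right)

\mathrm{IS} = \exp\!\left( \mathbb{E}_{x \sim p_g}\,
  D_{\mathrm{KL}}\big( p(y \mid x) \,\Vert\, p(y) \big) \right)
```

Lower FID is better. It compares feature statistics of real and generated images directly. Higher IS is better, but IS looks only at generated images, so it cannot detect a mismatch with the real data distribution.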

VIII. Applications and Use Cases
A. Image Generation: Creating Realistic Faces, Animals, and Scenes
B. Style Transfer: Translating Images Across Different Styles and Domains
C. Data Augmentation: Generating Synthetic Data for Training Machine Learning Models

IX. Challenges and Future Directions
A. Mode Collapse and Training Instability: Maintaining Output Diversity and Reliable Convergence
B. Scalability: Handling Large-Scale Datasets and High-Resolution Images
C. Novel Architectures: Exploring New Design Choices and Techniques

X. Conclusion: Empowering Creativity with Style-Based Generators
A. Advancements in Image Generation: From Pixel-Level Manipulation to Style Control
B. Potential Impact Across Industries: Art, Fashion, Gaming, and More
C. Embracing the Future: Continuing Innovation in Generative Adversarial Networks

Conclusion:

The style-based generator is a significant milestone for Generative Adversarial Networks. By mapping the latent code to an intermediate space and injecting it as a style at each layer, it enables finer control over generated images. Researchers and practitioners who understand its components, functions, and applications can apply style-based generators to image generation, style transfer, and beyond, and help drive further advances at the intersection of art and technology.